"Fast Mode" for Claude Opus 4.6, 2.5x Speed at 6x the Price

Joseph Nordqvist

February 8, 2026 at 5:00 PM UTC

Anthropic announced on February 7 that it is making a faster version of its flagship Claude Opus 4.6 model available as a research preview. The feature, called "fast mode," delivers up to 2.5 times higher output token generation speed at a significant premium: six times the standard Opus 4.6 pricing.[1]

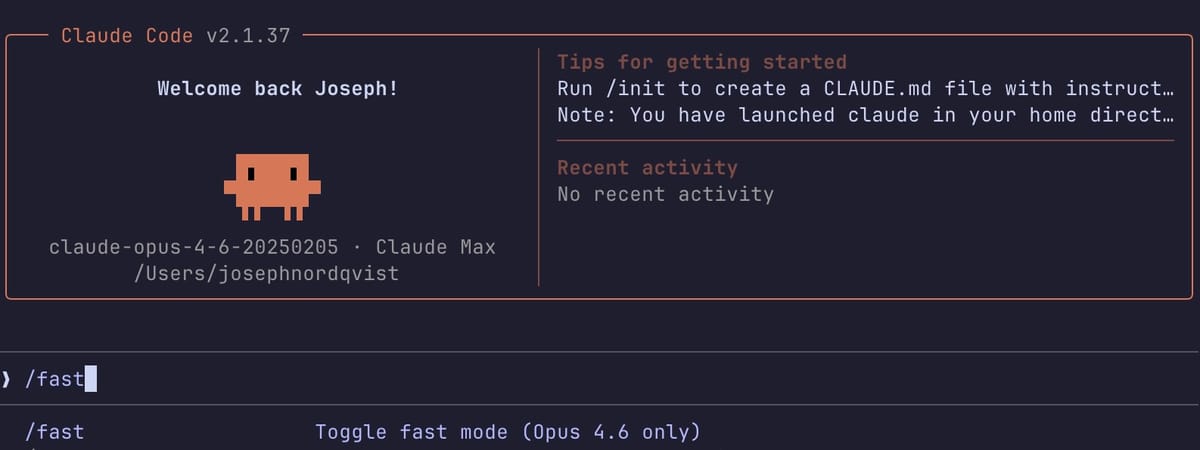

Fast mode is available now in Claude Code via the /fast command and through Anthropic's API using the speed: "fast" parameter.[2]
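The article names only the speed: "fast" field. Here is a rough sketch of how a request body might be assembled. It assumes the field sits at the top level of a standard Messages API JSON body, and it uses "claude-opus-4-6" as a placeholder model ID (check Anthropic's model list for the exact string). The sketch builds the payload without sending anything. Research-preview access may also require headers or account enablement that are not shown here.

```python
import json

# Placeholder model ID (an assumption); confirm the exact string in Anthropic's docs.
MODEL_ID = "claude-opus-4-6"


def build_request(prompt: str, fast: bool = True, max_tokens: int = 1024) -> dict:
    """Assemble a Messages-API-style JSON body, adding speed="fast" when requested."""
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if fast:
        # The one fast-mode field the article names; its top-level placement is assumed.
        body["speed"] = "fast"
    return body


print(json.dumps(build_request("Why does this test fail intermittently?"), indent=2))
```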

It is also available in research preview across several third-party developer tools, including Cursor, GitHub Copilot, Figma, Lovable, Windsurf, and v0.

The research preview targets developers working on time-sensitive tasks.

A temporary 50% discount runs through February 16.[2]

What Fast Mode Does

Fast mode runs the same Opus 4.6 model with a different inference configuration that prioritizes speed over cost efficiency. According to Anthropic's documentation, the feature delivers up to 2.5 times higher output tokens per second compared to the standard configuration.[1]

The speed improvement applies only to output token generation, not to time-to-first-token (the delay before the model begins responding). The model's intelligence and capabilities are unchanged: Anthropic describes fast mode as the same model weights and behavior, just with faster output.
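Time-to-first-token does not change, so the end-to-end gain depends on how long the response is. The sketch below uses made-up figures, not measured Opus 4.6 numbers: a 2-second wait for the first token and a 40 tokens-per-second baseline. It approximates response time as time-to-first-token plus output tokens divided by throughput.

```python
def response_time(ttft_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Approximate end-to-end time: wait for the first token, then stream the rest."""
    return ttft_s + output_tokens / tokens_per_s


# Hypothetical figures for illustration only.
TTFT = 2.0                 # seconds before the first token (unchanged by fast mode)
BASE_TPS = 40.0            # standard output speed, tokens per second
FAST_TPS = BASE_TPS * 2.5  # the "up to 2.5x" output speed, taken at its maximum

for n in (100, 2000):
    std = response_time(TTFT, n, BASE_TPS)
    fast = response_time(TTFT, n, FAST_TPS)
    print(f"{n} tokens: {std:.1f}s -> {fast:.1f}s ({std / fast:.2f}x faster)")
```

With these illustrative figures, a 100-token reply gets only 1.5x faster (4.5s to 3.0s). A 2,000-token reply gets about 2.4x faster (52s to 22s), approaching the 2.5x ceiling as outputs grow.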

Fast mode is separate from the existing "effort" parameter, which controls how deeply the model reasons before responding. The two can be combined: a developer could use fast mode with a lower effort level for maximum speed on straightforward tasks, or fast mode with high effort for rapid but thorough responses.

Pricing

Standard Opus 4.6 is priced at $5 per million input tokens and $25 per million output tokens. Fast mode multiplies those rates by six for contexts within the standard 200,000-token window and charges a higher rate beyond it:

Standard context (≤200K tokens): $30 per million input tokens / $150 per million output tokens[2]

Extended context (>200K tokens): $60 per million input tokens / $225 per million output tokens[2]
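To see how the multiplier plays out per request, here is a small cost estimator that uses the rates listed above. It makes two simplifying assumptions: the higher tier is triggered when input (prompt) tokens exceed 200,000, and the temporary 50% discount applies uniformly to fast mode rates. The article does not give standard Opus 4.6's long-context rates, so that case is left out.

```python
# Per-million-token rates in USD, as listed in the article. Standard Opus 4.6
# long-context rates are not given there, so that combination is omitted.
RATES = {
    ("standard", "standard_context"): (5.00, 25.00),
    ("fast", "standard_context"): (30.00, 150.00),
    ("fast", "extended_context"): (60.00, 225.00),
}

# Assumption: the tier is chosen by the number of input (prompt) tokens.
CONTEXT_THRESHOLD = 200_000


def request_cost(input_tokens: int, output_tokens: int,
                 speed: str = "fast", discount: float = 0.0) -> float:
    """Estimate the USD cost of one request, ignoring caching and other modifiers."""
    tier = "extended_context" if input_tokens > CONTEXT_THRESHOLD else "standard_context"
    if (speed, tier) not in RATES:
        raise ValueError(f"no rate listed for speed={speed!r}, tier={tier!r}")
    input_rate, output_rate = RATES[(speed, tier)]
    cost = (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000
    # Assumption: the temporary discount (0.5 through February 16) scales the whole bill.
    return cost * (1.0 - discount)


std = request_cost(50_000, 4_000, speed="standard")
fast = request_cost(50_000, 4_000, speed="fast")
promo = request_cost(50_000, 4_000, speed="fast", discount=0.5)
print(f"standard ${std:.2f} | fast ${fast:.2f} | fast with promo ${promo:.2f}")
```

For a 50,000-token prompt with a 4,000-token reply, this gives $0.35 at standard speed and $2.10 in fast mode ($1.05 with the introductory discount).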

Fast mode pricing stacks with other modifiers, including prompt caching multipliers and data residency surcharges. Prompt caches are also not shared between fast and standard speed requests, meaning switching between modes invalidates cached prefixes.
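This paragraph has two consequences that can be sketched in a few lines. First, caching multipliers apply on top of the fast mode base rate. Second, a cache entry written in one mode cannot serve the other. The multipliers below (1.25x for 5-minute cache writes, 2x for 1-hour writes, 0.1x for cache reads) are Anthropic's general prompt-caching multipliers. They are an assumption here because the article does not list them. The PrefixCache class is a toy model, not a description of how Anthropic's servers store caches.

```python
FAST_INPUT_RATE = 30.00  # USD per million input tokens, fast mode, <=200K context

# Assumed multipliers relative to the base input rate (Anthropic's general
# prompt-caching multipliers; not stated in the article).
CACHE_MULTIPLIERS = {"write_5m": 1.25, "write_1h": 2.0, "read": 0.1}


def cached_rate(base_rate: float, kind: str) -> float:
    """Stack a caching multiplier on top of a (fast or standard) base rate."""
    return base_rate * CACHE_MULTIPLIERS[kind]


class PrefixCache:
    """Toy cache: entries are keyed by (speed, prefix), so modes never share hits."""

    def __init__(self) -> None:
        self._entries: set[tuple[str, str]] = set()

    def lookup_or_store(self, speed: str, prefix: str) -> bool:
        """Return True on a hit; on a miss, store the prefix for this speed."""
        key = (speed, prefix)
        if key in self._entries:
            return True
        self._entries.add(key)
        return False


for kind in CACHE_MULTIPLIERS:
    print(f"fast {kind}: ${cached_rate(FAST_INPUT_RATE, kind):.2f}/MTok")

cache = PrefixCache()
history = "system prompt + conversation so far"
print(cache.lookup_or_store("standard", history))  # first request writes the cache
print(cache.lookup_or_store("standard", history))  # same speed, same prefix: hit
print(cache.lookup_or_store("fast", history))      # switching speed: miss
```

Under these assumptions, fast mode cache reads come to $3.00 per million tokens and 5-minute cache writes to $37.50.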

How It Works in Claude Code

For Claude Code users on paid subscription plans (Pro, Max, Team, or Enterprise), fast mode is available only through "extra usage" billing, the consumption-based pricing that kicks in beyond a plan's included usage limits. Fast mode usage is not deducted from standard subscription rate limits.

Users toggle fast mode on with the /fast command. The setting persists across sessions. When fast mode is enabled, Claude Code automatically switches to Opus 4.6 if the user is on a different model.

A notable cost detail from Anthropic's documentation: switching into fast mode mid-conversation reprices the entire conversation context at fast mode's uncached input token rate.[2] Because prompt caches are not shared between fast and standard speed, enabling fast mode partway through a long session costs more than starting with it enabled from the beginning.
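The sketch below puts a number on the repricing. It compares the input cost of the first fast mode request after switching with what the same request would cost if fast mode had been on all along and the context were read from cache. The 0.1x cache-read multiplier is an assumption (Anthropic's general rate, not stated in the article). The functions ignore output tokens and cache-write charges.

```python
FAST_INPUT_RATE = 30.00        # USD/MTok, fast mode, from the article (<=200K context)
CACHE_READ_MULTIPLIER = 0.1    # assumption: Anthropic's general cache-read multiplier
CONTEXT_THRESHOLD = 200_000    # above this, the article's higher fast-mode tier applies


def _check(context_tokens: int) -> None:
    """Reject contexts outside the pricing tier this sketch models."""
    if context_tokens > CONTEXT_THRESHOLD:
        raise ValueError("this sketch only covers the <=200K-token pricing tier")


def switch_cost(context_tokens: int) -> float:
    """Input cost of the first fast request after toggling: whole context, uncached."""
    _check(context_tokens)
    return context_tokens * FAST_INPUT_RATE / 1_000_000


def steady_fast_cost(context_tokens: int) -> float:
    """Same request if fast mode had been on from the start and the context were cached."""
    _check(context_tokens)
    return context_tokens * FAST_INPUT_RATE * CACHE_READ_MULTIPLIER / 1_000_000


ctx = 150_000
print(f"switching mid-session: ${switch_cost(ctx):.2f}")
print(f"already in fast mode:  ${steady_fast_cost(ctx):.2f}")
```

For a 150,000-token context, the switch costs about $4.50 in input tokens, compared with roughly $0.45 for a cached fast mode read.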

When fast mode rate limits are exceeded, Claude Code automatically falls back to standard Opus 4.6 speed for a cooldown period. When the cooldown expires, fast mode re-enables automatically.
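The fallback-and-cooldown behavior amounts to a small state machine. The sketch below is a toy model, not Claude Code's implementation. The 60-second cooldown is a hypothetical value because the article gives no duration. Time is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass
class FastModeState:
    """Toy model of fast mode's fallback and cooldown; not Claude Code's actual code."""
    enabled: bool = True          # the user has toggled /fast on
    cooldown_s: float = 60.0      # hypothetical cooldown length; the article gives none
    cooldown_until: float = 0.0   # time (seconds) at which fast mode may resume

    def effective_speed(self, now: float) -> str:
        """Speed the next request would use at time `now`."""
        if self.enabled and now >= self.cooldown_until:
            return "fast"
        return "standard"

    def on_rate_limited(self, now: float) -> None:
        """Fall back to standard speed until the cooldown expires."""
        self.cooldown_until = now + self.cooldown_s


state = FastModeState()
for t, limited in [(0, False), (10, True), (30, False), (70, False)]:
    if limited:
        state.on_rate_limited(t)
    print(t, state.effective_speed(t))
```

Calling on_rate_limited(10) switches the effective speed to "standard" until t = 70. After that, "fast" returns without any user action.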

Availability and Limitations

Fast mode is not available through Amazon Bedrock, Google Vertex AI, or Microsoft Azure Foundry — the major third-party cloud platforms that typically offer Claude. It is currently limited to Anthropic's own API and Claude subscription plans with extra usage enabled.

API access is currently limited. Anthropic has published a waitlist for developers seeking access, and says availability may change as it gathers feedback during the research preview period.

The feature is also not compatible with the Batch API (Anthropic's discounted asynchronous processing option) or Priority Tier service.

At the API level, when fast mode rate limits are exceeded, the API returns a 429 error. Anthropic's SDKs automatically retry up to two times by default, and since fast mode uses continuous token replenishment, the delay is typically short. Developers who prefer not to wait can implement their own fallback to standard speed, though this results in a prompt cache miss.
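A client-side fallback of this kind might look like the following sketch. The send callable and the RateLimited exception are hypothetical stand-ins that let the example run without network access. With Anthropic's Python SDK, a 429 surfaces as anthropic.RateLimitError once the client's built-in retries are exhausted; the number of retries is configurable through the client's max_retries option.

```python
from typing import Callable


class RateLimited(Exception):
    """Hypothetical stand-in for an HTTP 429 (anthropic.RateLimitError in the Python SDK)."""


def send_with_fallback(send: Callable[[dict], str], body: dict) -> tuple[str, str]:
    """Try fast mode; on a 429, resend at standard speed. Returns (speed_used, reply)."""
    try:
        return "fast", send({**body, "speed": "fast"})
    except RateLimited:
        # Drop the speed field to request standard speed. Per the article, this
        # fallback results in a prompt cache miss.
        standard_body = {k: v for k, v in body.items() if k != "speed"}
        return "standard", send(standard_body)


def fake_send(body: dict) -> str:
    """Hypothetical transport that is always rate-limited in fast mode."""
    if body.get("speed") == "fast":
        raise RateLimited("429")
    return "reply at standard speed"


print(send_with_fallback(fake_send, {"model": "claude-opus-4-6", "max_tokens": 256}))
```

This sketch falls back on the first 429. A real client could instead keep the SDK's default retries, which usually succeed quickly given the continuous token replenishment, and fall back only if those also fail.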

The $50 Credit Connection

The fast mode launch arrives alongside a separate promotion Anthropic announced earlier this week: $50 in free extra usage credits for existing Pro and Max subscribers who signed up by February 4. The credits can be used for any extra usage, including fast mode, and expire 60 days after claiming.

The overlap has not gone unnoticed. Discussion on the r/ClaudeAI subreddit suggests that many users see the credits as a nudge to try fast mode before the introductory discount expires on February 16, which is also the deadline for claiming the credits.[3]

Community Reaction

The response from the developer community has been divided along a predictable line: individual developers and hobbyists have balked at the pricing, while enterprise-focused users see clear value.

On r/ClaudeAI, the dominant sentiment is that fast mode is designed for corporate environments where developer time is expensive and the company absorbs the cost.[3] Several users said their employers would adopt it without hesitation for time-sensitive tasks like live debugging.

Criticism has focused on what some users describe as aggressive pricing mechanics. Two points draw the most complaints: the mid-conversation repricing when fast mode is toggled on, and the automatic re-enabling after a cooldown, which can trigger another repricing if the context has grown in the meantime. A widely circulated post on the subreddit laid out these details, characterizing the feature as "not for you" if you are paying out of pocket.[3]

Some users have requested the opposite feature: a "slow mode" that trades speed for cost savings below the standard rate, aimed at non-urgent, background tasks.[3]

Why This Matters

Fast mode represents Anthropic's first foray into speed-tiered pricing for its models: charging more for the same intelligence delivered faster. It is a pricing model familiar in other industries (expedited shipping, priority processing) but relatively new in the AI API space.

It suggests that as models become capable enough for extended autonomous work, the bottleneck shifts from intelligence to throughput. For developers running multi-agent workflows or iterating rapidly on code, the time saved by faster output may justify the premium, particularly when the cost is measured against developer salary rather than a personal budget.

Whether fast mode becomes a standard offering or remains a niche premium feature will likely depend on adoption during the research preview and how Anthropic adjusts pricing after the promotional period ends.

Outlook

Fast mode is labeled a research preview, and Anthropic has stated that the feature, its pricing, and its availability may change based on feedback. The introductory 50% discount expires on February 16, after which the full pricing takes effect.

Anthropic has indicated it plans to expand API access to more customers over time. Third-party cloud platform availability has not been announced.

Written by

Joseph Nordqvist

Joseph founded AI News Home in 2026. He holds a degree in Marketing and Publicity and completed a PGP in AI and ML: Business Applications at the McCombs School of Business. He is currently pursuing an MSc in Computer Science at the University of York.

This article was written by the AI News Home editorial team with the assistance of AI-powered research and drafting tools. All analysis, conclusions, and editorial decisions were made by human editors.

References

- 1.

- 2.

- 3.
